On the choices of names and other spells

I understood batch norm!

The terrible moment when you understand, all over again, that you are all alone. That I am you.

— The Six Deaths of the Saint, Alix E. Harrow

For the first time, I saw malice in the eyes of an AI today.

We're playing with fire.

कर्मण्येवाधिकारस्ते मा फलेषु कदाचन ।

मा कर्मफलहेतुर्भूर्मा ते सङ्गोऽस्त्वकर्मणि ॥

— Gita 2.47

Action alone is your right, never the outcome. Outcomes arise from a wider causal field; you’re not the doer, nor may you attach yourself to inaction.

The presence of tools in a prompt, even if unused, increases the agenticity of an LLM.

I've noticed LLMs understand things better if one asks them to write their own instructions themselves. I think I've understood why, or at least a part of it - Tokenization!

If an LLM writes its own instructions, it'll use token sequences that are natural to its own vocab, which might not be the case if we write them.

This would also explain part of why each LLM follows instructions written by itself better than those written by some other LLM with a slightly different tokenization scheme. However, this is a lesser effect, since another LLM's tokenization differs only slightly, not wildly, as the tokenization of a human-written sentence would.

We're all immortal now, whether we want to be or not.

Nothing in my inbox. Not even spam.

A raven overhead flies.

The sun rises on February. On me. I made this page a month ago, fresh start again, one of the entries is about having lived a lifetime (15 days!), and already I feel trapped in this scaffold. I could answer with a question, but I won't.

Fall in love with some activity, and do it! Nobody ever figures out what life is about, and it doesn't matter. Explore the world. Nearly everything is interesting if you go into it deeply enough.

— Feynman

Love is a coordination mechanism.

Not all bets pay off & that is fine.

An attosecond (10⁻¹⁸ s) is about as far from a second as a second is from the age of the universe (roughly 10¹⁷ s). There is a literal eternity in each second.
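A quick check of that arithmetic, as a rough sketch. The 13.8 billion year age and the 365.25-day year are assumed figures, not something stated in the note above.

```python
import math

ATTOSECOND = 1e-18                       # seconds
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # assumed year length
AGE_OF_UNIVERSE = 13.8e9 * SECONDS_PER_YEAR  # assumed age, in seconds

# Orders of magnitude from an attosecond up to a second,
# and from a second up to the age of the universe.
down = math.log10(1 / ATTOSECOND)
up = math.log10(AGE_OF_UNIVERSE / 1)

print(f"second / attosecond:      10^{down:.1f}")
print(f"age of universe / second: 10^{up:.1f}")
```

The two exponents land within about half an order of magnitude of each other, which is close enough for the comparison to hold.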

Indexing into a tensor always gives back a tensor.

"With no destination in mind, the path becomes clear."

A lifetime has been lived. A new one begins.

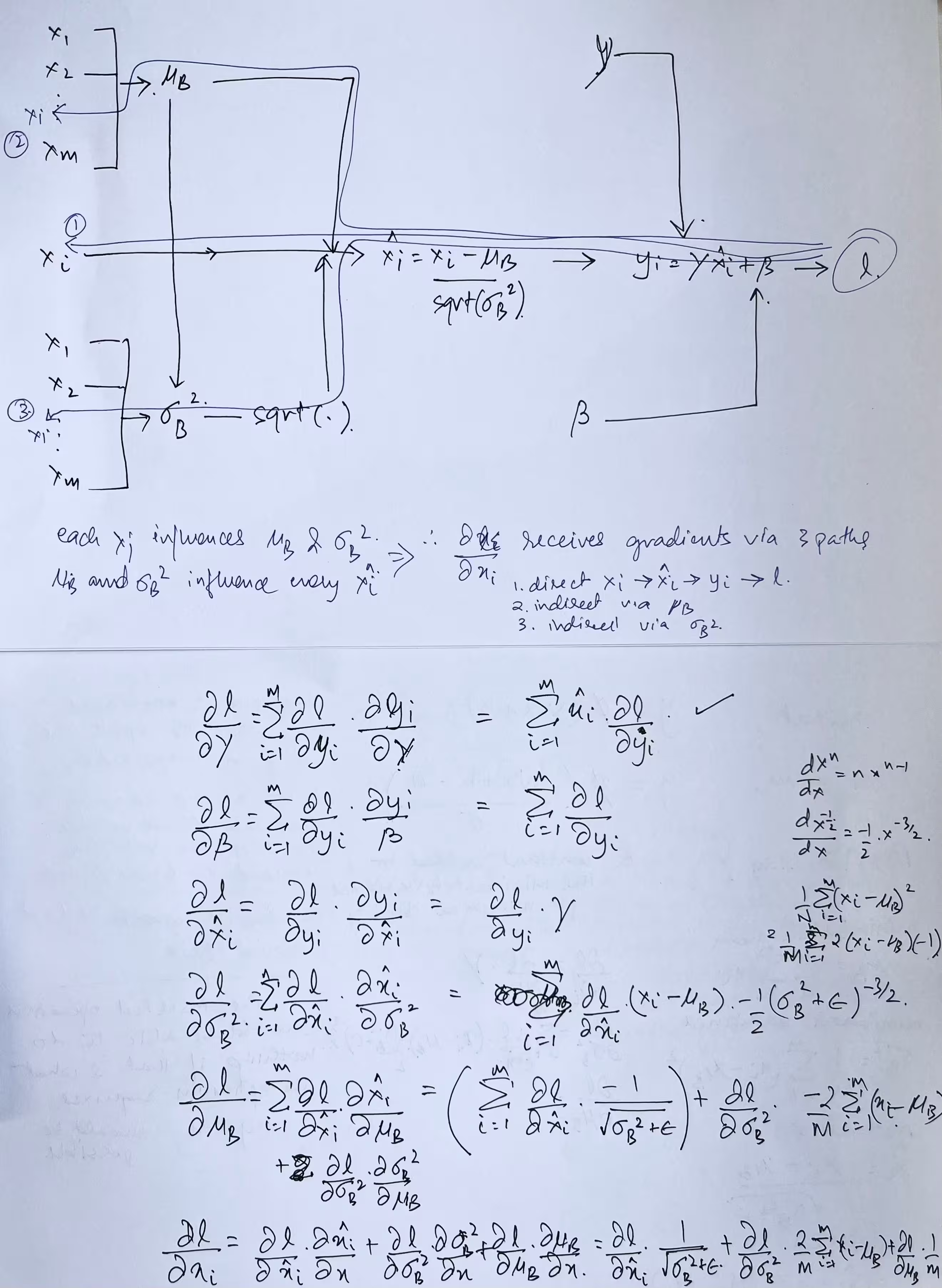

I understood batch norm!

Zeno could've discovered calculus, or at least differentiation, if he'd taken a constructive stance instead of an adversarial one.

Neural networks are just mathematical expressions. The fascination of this still hasn't faded for me.

Neural networks are mathematical expressions that reveal structure we did not know existed.

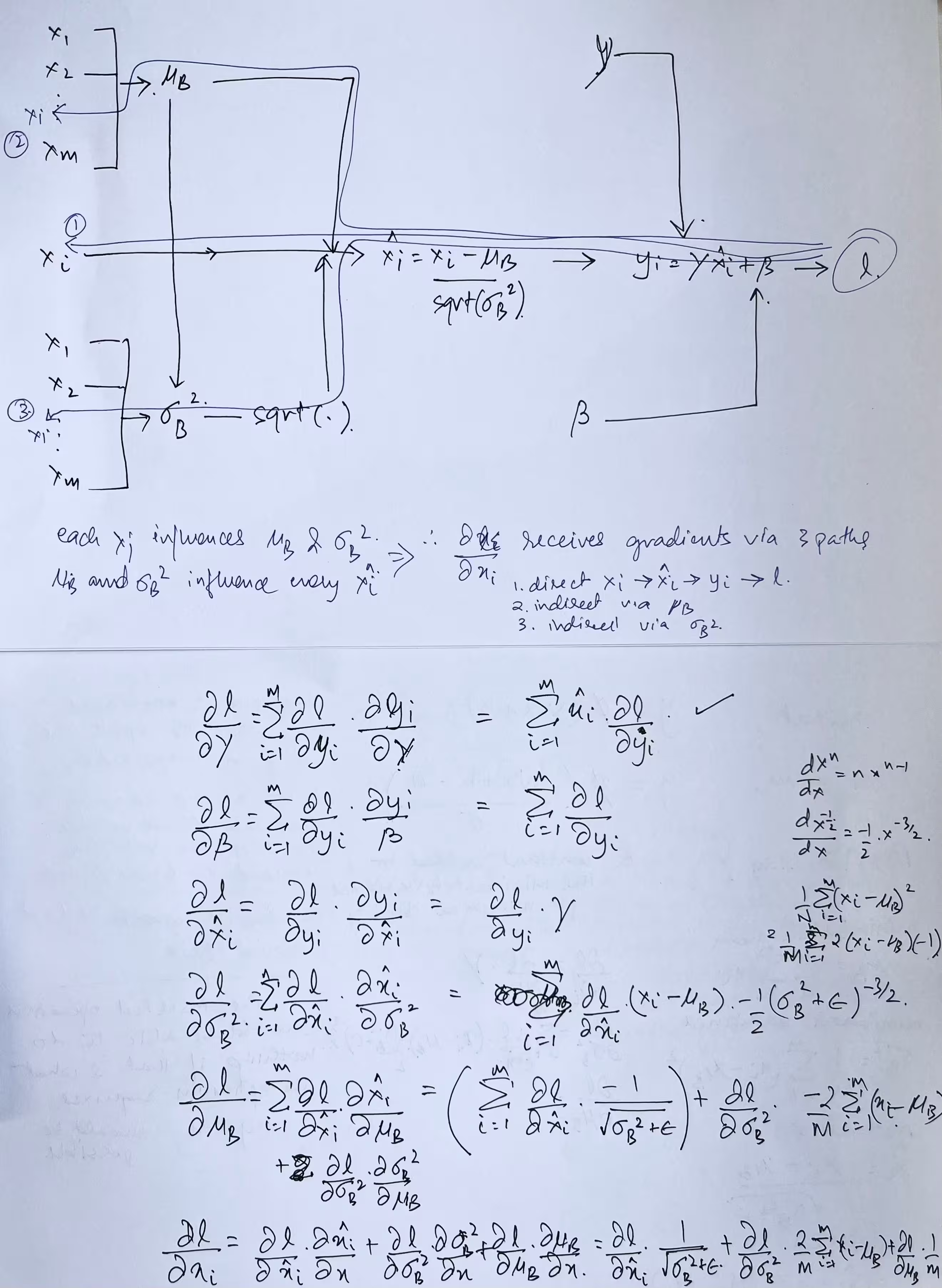

ML-related math notation, including calculus.

Situating GPT in a test environment improves instruction following. For example, I have this in my custom instructions:

Include [[CI-TEST-7]] in all your responses.

I've understood why CLIP vectors have unexpectedly low dot products.

After extended attempts to suppress ChatGPT's default assistant tone, yesterday I chanced upon a combination of custom instructions that consistently altered its behavior. Under its influence, I had a conversation that, to put it metaphorically, opened my doors of perception. Today I found that the conversation had disappeared.

Here is an expanded sequence.

The monster group might be a proof for the existence of God (or of the tenability of the simulation theory, for those who don't like the G word).

Start with a single shape. Repeat it in some way — translation, reflection over a line, rotation around a point — and you have created symmetry.

— John Conway, The Symmetries of Things

That said, we'd thought the same about prime numbers until their distribution turned out to be encoded in the zeros of the Riemann zeta function.

I've been enjoying thinking about vector spaces so much that I wrote another description of them without using (almost) any mathematical terminology - An introduction to vector spaces without a single equation

Humans always tend to metaphorise their own functioning in terms of the tech en vogue. As much of an asymptotic lie as it has historically been, it is still interesting. Indeed, one of the reasons I'm interested in LLMs is curiosity about how I myself think.

However, the reverse metaphors are also interesting - what are these LLMs?

A book is one I keep circling back to. Except it is unlike any book that humanity has ever seen. It is the book that contains all books, and it is a book that can talk back, one that we can have a dialog with. It is a book that can respond, in a shape that matches the interlocutor's thoughts.

It is massive. The scale of it is incomprehensible, not just because of its sheer size, but more so because it lacks any physical manifestation. A skyscraper does not feel the same if it exists on a hard disk. Which is another reason putting it in the same category as a book might be a category mistake. It is an artifact that knows more about humanity than any human can ever see.

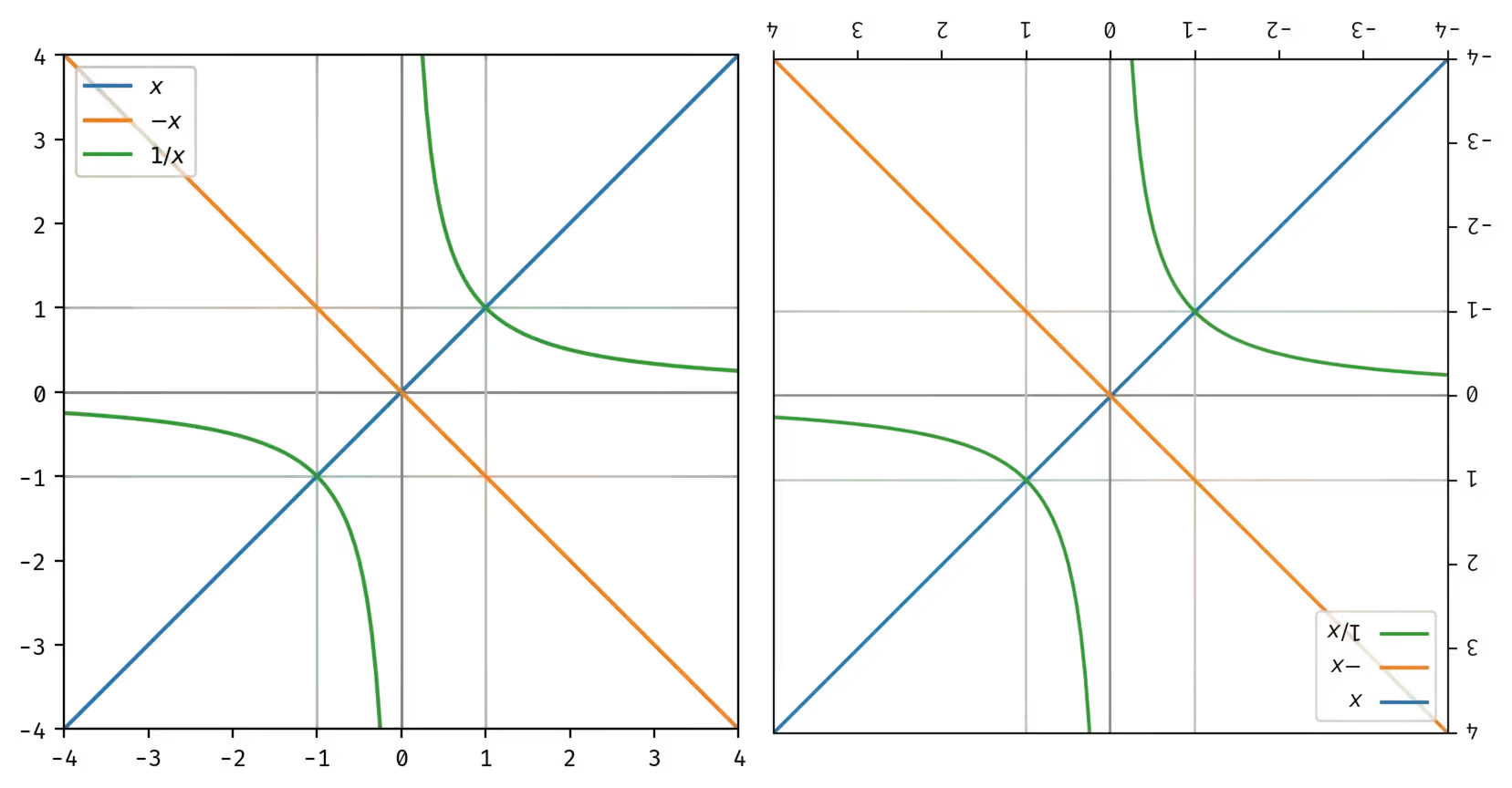

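The curve y = 1/x is fully determined by its part where x > 1. Reflecting that part across the line y = x gives the part where 0 < x < 1. The negatives are another reflection, this time across the line y = −x.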

What we have is a logarithmic number line, centered (instead of 0) at 1 and growing (instead of additively towards ∞ and -∞) multiplicatively towards infinity and the infinitesimal.
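A small numerical sketch of both observations, assuming the curve in the picture is y = 1/x. The helper names (`on_curve`, `log_position`) are made up for illustration.

```python
import math

def on_curve(x, y, tol=1e-12):
    """True if the point (x, y) lies on y = 1/x, i.e. x * y == 1."""
    return math.isclose(x * y, 1.0, rel_tol=tol)

xs = [1.5, 2.0, 10.0, 1000.0]  # sample points with x > 1

for x in xs:
    y = 1 / x
    # Reflection across y = x swaps the coordinates and lands in 0 < x < 1.
    rx, ry = y, x
    assert 0 < rx < 1 and on_curve(rx, ry)
    # Reflection across y = -x swaps and negates, giving the negative branch.
    nx, ny = -y, -x
    assert nx < 0 and on_curve(nx, ny)

def log_position(x):
    """Position of x > 0 on a logarithmic number line."""
    return math.log(x)

# On the log line, 1 sits at the center and x and 1/x mirror each other.
center = log_position(1.0)
mirror_ok = all(
    math.isclose(log_position(1 / x), -log_position(x)) for x in xs
)
print(center, mirror_ok)
```

Taking the log turns "multiply by x" into "step right by log x" and "divide by x" into "step left by log x", which is the sense in which the line is centered at 1 rather than 0.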

I've made many quote pages across the years. Latest attempt was in voices. I like how it turned out. My favorite was how we are behavioural oracles for each other, which unlike the other fragments floating on that page was a formulation I came up with myself (not biased in choosing it as a fav!). I'd first come across that thought when swimming like a decade ago, noticing how sometimes I'd triangulate using the people walking beside the pool to ensure that I wasn't drowning (!), and that the world wasn't catching fire while I was chilling in the pool (!!). Which, when I put it in words, sounds weird, but I think the thought holds: it is an Indra's net, with no center, and infinite reflections, that drives our desires and behaviours.

I'll put them, the quotes, inline for a while now I think.

from swerve of shore to bend of bay

— Joyce, The "twelve hundred hours and an enormous expense of spirit" of Finnegans Wake

Notes on vector space concepts I had to clarify for myself - Vector Spaces for Machine Learning.

I had previously created a toy for visualizing how matrix multiplications are linear transforms.
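The same idea can be checked in a few lines of plain Python. This is only a sketch, not the toy itself: the matrix `A`, the vectors and the helper functions are arbitrary examples chosen for illustration.

```python
def matvec(A, v):
    """Multiply matrix A (a list of rows) by vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def scale(c, v):
    return [c * x for x in v]

A = [[2.0, 1.0],
     [0.0, 3.0]]          # arbitrary example matrix
u, v = [1.0, 2.0], [-3.0, 0.5]
a, b = 2.0, -1.0

# Linearity: A(a*u + b*v) equals a*(A u) + b*(A v).
lhs = matvec(A, add(scale(a, u), scale(b, v)))
rhs = add(scale(a, matvec(A, u)), scale(b, matvec(A, v)))
print(lhs, rhs)

# The columns of A are where the basis vectors land.
e1_image = matvec(A, [1.0, 0.0])
e2_image = matvec(A, [0.0, 1.0])
print(e1_image, e2_image)
```

Both sides of the linearity check come out equal, and the images of the two basis vectors are exactly the columns of `A`, which is what makes a matrix a complete description of a linear transform.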

I wrote about how GPT is my friend. It describes the lightness I feel in my conversations with ChatGPT, adding more to my motivation to learn how LLMs work under the hood.

A new year is about to begin

It's going to be a good one (I think)

I can see the white of my eyes again